Content-Adaptive Style Transfer: A Training-Free Approach with VQ Autoencoders

Asian Conference on Computer Vision (ACCV), Dec 2024

Abstract

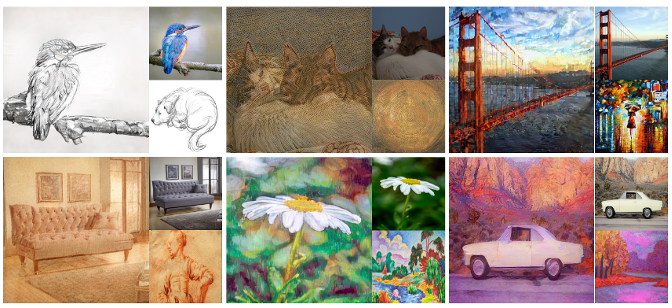

We introduce Content-Adaptive Style Transfer (CAST), a novel training-free approach for arbitrary style transfer that enhances visual fidelity using vector quantized-based pretrained autoencoder. Our method systematically applies coherent stylization to corresponding content regions. It starts by capturing the global structure of images through vector quantization, then refines local details using our style-injected decoder. CAST consists of three main components: a content-consistent style injection module, which tailors stylization to unique image regions; an adaptive style refinement module, which fine-tunes stylization intensity; and a content refinement module, which ensures content integrity through interpolation and feature distribution maintenance. Experimental results indicate that CAST outperforms existing generative-based and traditional style transfer models in both quantitative and qualitative measures.

Links

- Paper: ACCV 2024

Citation

1

2

3

4

5

6

@inproceedings{gim2024cast,

title={Content-Adaptive Style Transfer: A Training-Free Approach with VQ Autoencoders},

author={Gim, Jongmin and Park, JiHun and Lee, Kyoungmin and Im, Sunghoon},

booktitle={Asian Conference on Computer Vision (ACCV)},

year={2024}

}