A Training-Free Style-Personalization via SVD-Based Feature Decomposition

arXiv preprint

Abstract

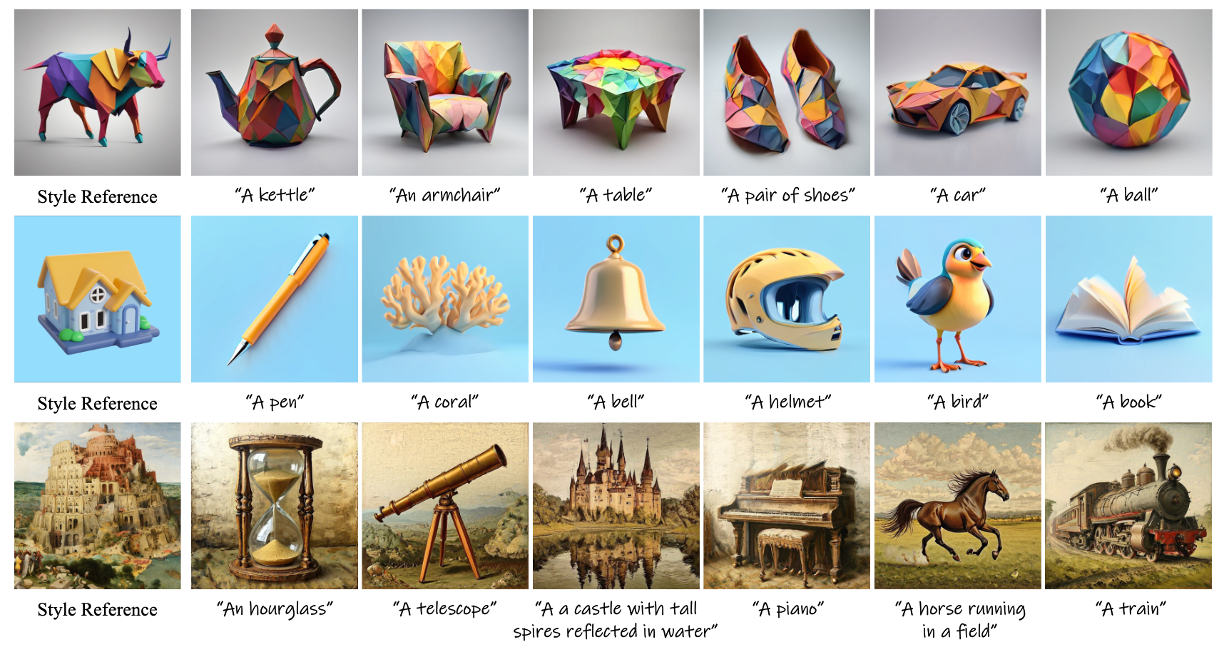

We present a training-free framework for style-personalized image generation that operates during inference using a scale-wise autoregressive model. Our method generates a stylized image guided by a single reference style while preserving semantic consistency and mitigating content leakage. Through a detailed step-wise analysis of the generation process, we identify a pivotal step where the dominant singular values of the internal feature encode style-related components. Building upon this insight, we introduce two lightweight control modules: Principal Feature Blending, which enables precise modulation of style through SVD-based feature reconstruction, and Structural Attention Correction, which stabilizes structural consistency by leveraging content-guided attention correction across fine stages. Extensive experiments demonstrate that our method, without any additional training, achieves style fidelity and prompt fidelity competitive with fine-tuned baselines, while offering faster inference and greater deployment flexibility.
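The abstract does not spell out the blending rule behind Principal Feature Blending, so the following is only a minimal NumPy sketch of one plausible reading: decompose a content feature map and a style feature map with SVD, mix the top-`k` singular values, and reconstruct the feature with the content's singular vectors. The function name `blend_principal_features`, the parameters `k` and `alpha`, and the (tokens x channels) feature layout are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np


def blend_principal_features(content_feat, style_feat, k=1, alpha=1.0):
    """Hypothetical sketch: mix the top-k singular values of two feature maps.

    content_feat, style_feat: 2-D arrays of identical shape (tokens x channels).
    k: number of dominant singular values to modify (assumed hyperparameter).
    alpha: blend weight; 0 keeps the content values, 1 uses the style values.
    """
    # Thin SVD of both features; singular values come back in descending order.
    u_c, s_c, vt_c = np.linalg.svd(content_feat, full_matrices=False)
    _, s_s, _ = np.linalg.svd(style_feat, full_matrices=False)

    # Interpolate only the dominant singular values, leaving the rest untouched.
    s_mix = s_c.copy()
    s_mix[:k] = (1.0 - alpha) * s_c[:k] + alpha * s_s[:k]

    # Reconstruct with the content's singular vectors and the mixed spectrum.
    return (u_c * s_mix) @ vt_c


# Toy usage with random arrays standing in for internal model features.
rng = np.random.default_rng(0)
content = rng.standard_normal((16, 8))
style = rng.standard_normal((16, 8))
blended = blend_principal_features(content, style, k=2, alpha=0.5)
```

With `alpha=0` the function returns the content feature unchanged (up to floating-point error), which makes it easy to check the reconstruction path. Keeping the content's singular vectors is a design choice of this sketch, intended to reflect the abstract's goal of changing style while preserving semantic content.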

Links

- Paper: arXiv:2507.04482

Citation

@article{lee2025svdstyle,
  title={A Training-Free Style-Personalization via SVD-Based Feature Decomposition},
  author={Lee, Kyoungmin and Park, Jihun and Gim, Jongmin and Choi, Wonhyeok and Hwang, Kyumin and Kim, Jaeyeul and Im, Sunghoon},
  journal={arXiv preprint arXiv:2507.04482},
  year={2025}
}